Simple Socket Server and Client

Learn how to implement a simple multi-threaded TCP server and client in Java for future use in databases. Also perform benchmarking with a variable number of threads for both the server and client.

Introduction

In this project we’re going to implement a simple socket server as the starting point for building databases. This server will only do one thing: read a fixed number of bytes from a client connection and respond with the identical bytes. In other words, it is an echo server. We can even think of this server as a fake, read-only DB that receives a get request for a key and returns a fake value. In future projects, we’ll use the techniques explored in this project to develop database servers that actually perform actions based upon their input.

You can find the full code for this project in the DBFromZero Java repository on GitHub to read, modify, and run yourself.

Developing the Server

We use the ServerSocket class to open a socket for listening within our server. For a single-threaded server, we then use a loop to process individual connections as they arrive using the ServerSocket.accept() method. This method returns a Socket for communication with the client. For each connection, we simply read a fixed number of bytes and then write back the same bytes received.

    public static void runServerSingleThreaded() throws Exception {
        try (var serverSocket = new ServerSocket(PORT, BACKLOG, InetAddress.getByName(HOST))) {
            while (true) { // break out of loop when ServerSocket.accept throws IOException
                // try-with-resources closes the client socket after each echo
                try (var socket = serverSocket.accept()) {
                    var buffer = new byte[MSG_LENGTH];
                    readArrayFully(socket.getInputStream(), buffer);
                    socket.getOutputStream().write(buffer);
                }
            }
        }
    }

    private static void readArrayFully(InputStream s, byte[] bs) throws IOException {
        int i = 0, n;
        // read() may return fewer bytes than requested, so loop until the
        // buffer is full or the stream ends (read() returns -1)
        while (i < bs.length && (n = s.read(bs, i, bs.length - i)) != -1) {
            i += n;
        }
        if (i != bs.length) {
            throw new RuntimeException("Failed to read full message. Only read " + i + " bytes");
        }
    }
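The JDK also ships helpers that cover the same ground as readArrayFully. The following is a minimal sketch of two alternatives, assuming Java 9 or later for InputStream.readNBytes. The class name ReadFullyDemo and its method names are hypothetical and do not come from the repository.

```java
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.EOFException;
import java.io.IOException;
import java.io.InputStream;
import java.util.Arrays;

// Hypothetical helper class for illustration; not part of the DBFromZero repository.
final class ReadFullyDemo {

    // Variant 1: InputStream.readNBytes (Java 9+) blocks until the requested number
    // of bytes has been read or the stream ends, and returns the count actually read.
    static void readWithReadNBytes(InputStream in, byte[] buffer) throws IOException {
        int n = in.readNBytes(buffer, 0, buffer.length);
        if (n != buffer.length) {
            throw new EOFException("Failed to read full message. Only read " + n + " bytes");
        }
    }

    // Variant 2: DataInputStream.readFully throws EOFException itself on a short read.
    static void readWithDataInputStream(InputStream in, byte[] buffer) throws IOException {
        new DataInputStream(in).readFully(buffer);
    }

    public static void main(String[] args) throws IOException {
        byte[] source = {1, 2, 3, 4};
        byte[] buffer = new byte[4];
        readWithReadNBytes(new ByteArrayInputStream(source), buffer);
        System.out.println(Arrays.toString(buffer)); // prints [1, 2, 3, 4]
    }
}
```

Both variants signal a short read with a checked EOFException rather than the RuntimeException used above, so callers that already handle IOException need no extra catch clause.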

To process multiple connections concurrently, we can use threading. This is best accomplished with a ThreadPoolExecutor, which maintains a pool of threads for reuse. We still accept connections within our main thread and then pass off each received Socket to the executor for running in a different thread.

    public static void runServerMultiThreaded() throws Exception {
        var executor = Executors.newFixedThreadPool(N_THREADS);
        try {
            try (var serverSocket = new ServerSocket(PORT, BACKLOG, InetAddress.getByName(HOST))) {
                while (true) { // break out of loop when ServerSocket.accept throws IOException
                    // accept on the main thread, then hand the socket to a pool thread
                    var socket = serverSocket.accept();
                    executor.execute(() -> processConnection(socket));
                }
            }
        } finally {
            // interrupt in-flight workers and give them a moment to finish
            executor.shutdownNow();
            executor.awaitTermination(1, TimeUnit.SECONDS);
        }
    }

    private static void processConnection(Socket socket) {
        try (socket) { // the worker owns the socket and closes it when done
            var buffer = new byte[MSG_LENGTH];
            readArrayFully(socket.getInputStream(), buffer);
            socket.getOutputStream().write(buffer);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
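To try the accept-then-hand-off pattern without starting a separate client process, the following sketch runs one echo round trip inside a single JVM. It is a simplified illustration rather than the repository's code: the class EchoRoundTrip, the 8-byte MSG_LENGTH, the ephemeral port (port 0), and the 5-second timeouts are all assumptions made for the example, and it relies on Java 11 for InputStream.readNBytes(int).

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.Arrays;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Hypothetical demo class; names and constants are illustrative assumptions.
final class EchoRoundTrip {
    static final int MSG_LENGTH = 8; // assumed message size

    // Server-side worker: read exactly MSG_LENGTH bytes and write them back.
    static void echoOnce(Socket socket) {
        try (socket) {
            byte[] buffer = socket.getInputStream().readNBytes(MSG_LENGTH);
            if (buffer.length == MSG_LENGTH) {
                socket.getOutputStream().write(buffer);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Starts a server on an ephemeral loopback port, sends one message, returns the reply.
    static byte[] roundTrip(byte[] message) {
        if (message.length != MSG_LENGTH) {
            throw new IllegalArgumentException("message must be " + MSG_LENGTH + " bytes");
        }
        InetAddress loopback = InetAddress.getLoopbackAddress();
        ExecutorService executor = Executors.newFixedThreadPool(2);
        try (ServerSocket serverSocket = new ServerSocket(0, 50, loopback)) {
            serverSocket.setSoTimeout(5_000); // accept() gives up if no client connects
            int port = serverSocket.getLocalPort();
            // The client runs on a pool thread so the calling thread can act as acceptor.
            Future<byte[]> reply = executor.submit(() -> {
                try (Socket client = new Socket(loopback, port)) {
                    client.getOutputStream().write(message);
                    return client.getInputStream().readNBytes(MSG_LENGTH);
                }
            });
            // Accept on the calling thread, then hand the socket to the executor.
            Socket socket = serverSocket.accept();
            executor.execute(() -> echoOnce(socket));
            return reply.get(5, TimeUnit.SECONDS);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException | ExecutionException | TimeoutException e) {
            throw new IllegalStateException(e);
        } finally {
            executor.shutdownNow();
        }
    }

    public static void main(String[] args) {
        byte[] message = {1, 2, 3, 4, 5, 6, 7, 8};
        System.out.println(Arrays.equals(message, roundTrip(message))); // prints true
    }
}
```

Binding to port 0 lets the operating system pick a free port, which avoids clashes with a server that is already running on a fixed port.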

Developing the Client

To connect to the server, we use the Socket class. We then simply access the socket's streams to send and then receive a fixed number of bytes.

    public static void runClientSingleThreaded() throws Exception {
        var sendMsg = new byte[MSG_LENGTH];
        var recvMsg = new byte[MSG_LENGTH];
        var random = new Random();
        while (true) { // run until process is terminated
            random.nextBytes(sendMsg);
            // open a fresh connection for every message
            try (var socket = new Socket(HOST, PORT)) {
                socket.getOutputStream().write(sendMsg);
                readArrayFully(socket.getInputStream(), recvMsg);
            }
            // the echoed bytes must match what we sent
            if (!Arrays.equals(sendMsg, recvMsg)) {
                throw new RuntimeException(String.format("Inconsistent messages %s %s",
                        Hex.encodeHexString(sendMsg), Hex.encodeHexString(recvMsg)));
            }
        }
    }

We can make some simple modifications to run multiple client connections concurrently using threads.

    public static void runClientMultiThreaded() throws Exception {
        var threads = IntStream.range(0, N_THREADS)
                .mapToObj(i -> new Thread(() -> {
                    // each thread has its own Random and buffers, so no state is shared
                    var random = new Random();
                    var sendMsg = new byte[MSG_LENGTH];
                    var recvMsg = new byte[MSG_LENGTH];
                    try {
                        while (true) { // run until process is terminated
                            random.nextBytes(sendMsg);
                            try (var socket = new Socket(HOST, PORT)) {
                                socket.getOutputStream().write(sendMsg);
                                readArrayFully(socket.getInputStream(), recvMsg);
                            }
                            if (!Arrays.equals(sendMsg, recvMsg)) {
                                throw new RuntimeException(String.format("Inconsistent messages %s %s",
                                        Hex.encodeHexString(sendMsg), Hex.encodeHexString(recvMsg)));
                            }
                        }
                    } catch (IOException e) {
                        e.printStackTrace();
                    }
                })).collect(Collectors.toList());
        threads.forEach(Thread::start);
        // block until every client thread has exited
        for (var thread : threads) {
            thread.join();
        }
    }

Benchmarking

Now that we have developed a multi-threaded server/client pair, we want to benchmark the performance of these simple systems. Specifically, we want to vary the number of threads for each component and measure the number of messages transmitted. To this end, a mini benchmarking suite is developed consisting of a driver thread that iterates through a collection of parameters, runs the server and client for a fixed interval of time, and records the number of received messages. The server and client run on the same host and communicate through the loopback interface (i.e., localhost).

The multi-threaded server and client example code that we've seen so far is adapted to run within the benchmark driver. Adaptations are made to the code so that we can cleanly shut down each component without having to terminate the JVM. A chief concern is managing shared state within this code, and to that end atomic wrappers are used. Additionally, the client is modified to run connector threads for a specific period of time while counting the number of transactions. You can check out the code in the repo to see the details of the adaptations from the examples we've explored so far.
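The shape of such an adaptation can be sketched as follows. This is not the repository's code: the class TimedClientCounter and its countTransactions method are hypothetical, and the transaction is passed in as a Runnable so the example runs without a server. In the benchmark it would be one socket round trip.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical sketch of a timed, counting client; not the repository's implementation.
final class TimedClientCounter {

    // Runs nThreads workers that repeat `transaction` until durationMillis has elapsed,
    // then returns the total number of completed transactions.
    static long countTransactions(int nThreads, long durationMillis, Runnable transaction)
            throws InterruptedException {
        AtomicBoolean running = new AtomicBoolean(true); // shared stop flag
        AtomicLong completed = new AtomicLong();          // shared transaction counter
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < nThreads; i++) {
            threads.add(new Thread(() -> {
                while (running.get()) {
                    transaction.run();
                    completed.incrementAndGet();
                }
            }));
        }
        threads.forEach(Thread::start);
        try {
            Thread.sleep(durationMillis); // measurement window
        } finally {
            running.set(false); // ask workers to stop after their current transaction
        }
        for (Thread thread : threads) {
            thread.join(); // clean shutdown without terminating the JVM
        }
        return completed.get();
    }

    public static void main(String[] args) throws InterruptedException {
        // Stand-in transaction that does nothing; a real client would echo one message.
        long count = countTransactions(4, 200, () -> { });
        System.out.println("Completed transactions: " + count);
    }
}
```

The AtomicBoolean lets the driver stop all workers without interrupting a transaction midway, and the AtomicLong lets them share one counter without explicit locking.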

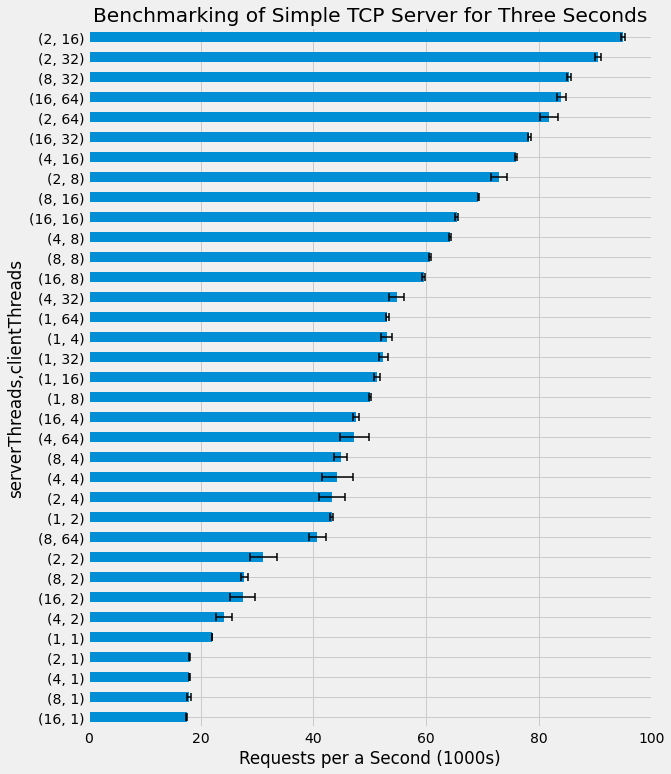

In the benchmarks, the number of threads for each component varies by powers of two. For the server, we use a maximum of 32 threads, and for the client the maximum is 64. These values were chosen from some preliminary benchmarking that showed server performance tapering off faster than client performance as the number of threads increased. All combinations of server/client thread counts are tested in these benchmarks. A duration of 3 seconds of client activity is measured in each trial run, and a total of 10 trials are performed for each server/client thread count pair. This leads to a total of 350 trials.

The benchmarking code can be run locally for development and testing. To standardize the benchmarks, an AWS EC2 VM is used to run the code for reported results. The specs of this host are as follows.

- Instance type : c5n.2xlarge

- vCPUs : 8

- RAM : 21 GiB

- OS Image : Ubuntu 18.04 LTS - Bionic

- Kernel : Linux version 4.15.0-1065-aws

- JRE : OpenJDK 64-Bit Server VM version 11.0.6

- net.ipv4.tcp_tw_reuse : Enabled to prevent port exhaustion due to TIME-WAIT

Analysis of Benchmarking Results

A simple analysis is performed consisting of aggregating the different trials for the same number of server/client threads to compute the median number of messages transmitted as well as the 25th and 75th percentiles. The results are summarized in the figure.
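The aggregation for a single server/client thread count pair can be sketched as below. The class TrialSummary is hypothetical, the trial counts in main are made-up numbers rather than measured results, and linear interpolation between neighboring ranks is an assumed percentile convention. The original analysis may have used a different one.

```java
import java.util.Arrays;

// Hypothetical helper for summarizing the trials of one thread-count pair.
final class TrialSummary {

    // Returns the p-th percentile (0 to 100) of the values, interpolating linearly
    // between the two nearest ranks of the sorted data.
    static double percentile(long[] values, double p) {
        if (values.length == 0) {
            throw new IllegalArgumentException("at least one trial is required");
        }
        long[] sorted = values.clone(); // leave the caller's array untouched
        Arrays.sort(sorted);
        double rank = p / 100.0 * (sorted.length - 1);
        int lo = (int) Math.floor(rank);
        int hi = (int) Math.ceil(rank);
        return sorted[lo] + (rank - lo) * (sorted[hi] - sorted[lo]);
    }

    public static void main(String[] args) {
        long[] trials = {50, 10, 40, 20, 30}; // made-up message counts for illustration
        System.out.println("p25=" + percentile(trials, 25)
                + " median=" + percentile(trials, 50)
                + " p75=" + percentile(trials, 75));
        // prints p25=20.0 median=30.0 p75=40.0
    }
}
```

Reporting the median with the interquartile range keeps a single unusually slow or fast trial from distorting the summary.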

The main takeaway from these results is that we don't maximize transmissions with the largest number of threads for either the server or the client. This might be attributed to insufficient hardware resources to run all of the threads concurrently, since the host has only 8 vCPUs. Additionally, the hardware and kernel limit the local network performance such that threads may have to wait on socket I/O even if there are sufficient user-space CPU resources.

Nonetheless, it's impressive that we could achieve over 95,000 transmissions per second with these simple server/client components.

Discussion and Next Steps

In this project we developed simple multi-threaded server/client systems and learned a bit about the Java socket networking utilities for developing such systems. We'll apply similar methods in future projects as we start to develop systems that look more like actual databases. Additionally, we performed some simple benchmarking to quantify the performance of these systems with different thread counts. These benchmarking results will serve as a useful idealized baseline in future projects where the database server has to perform some actual data storage and access. We'll use this baseline to quantify how much these data operations contribute to system performance.

It's worth noting that the performance of these systems could likely be greatly improved by reusing individual socket connections rather than opening a new socket each time. We'll explore these implications in a future project where we will implement a RESTful HTTP server and client, and then benchmark the effect of HTTP connection reuse.
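As a rough preview of what connection reuse could look like for the echo protocol, the sketch below sends several messages over a single socket. It is only an illustration under assumptions: the class ReusedConnectionEcho, the 8-byte MSG_LENGTH, and the ephemeral loopback port are hypothetical choices, and no performance claim is made for it here.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.Arrays;
import java.util.Random;

// Hypothetical sketch of connection reuse; not taken from the repository.
final class ReusedConnectionEcho {
    static final int MSG_LENGTH = 8; // assumed message size

    // Server side: keep echoing fixed-size messages until the client closes the socket.
    static void echoUntilClosed(Socket socket) {
        try (socket) {
            InputStream in = socket.getInputStream();
            OutputStream out = socket.getOutputStream();
            while (true) {
                byte[] buffer = in.readNBytes(MSG_LENGTH);
                if (buffer.length < MSG_LENGTH) {
                    break; // end of stream: the client closed the connection
                }
                out.write(buffer);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Sends nMessages over ONE connection and returns how many came back intact.
    static int roundTrips(int nMessages) {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        try (ServerSocket serverSocket = new ServerSocket(0, 50, loopback)) {
            Thread server = new Thread(() -> {
                try {
                    echoUntilClosed(serverSocket.accept());
                } catch (IOException e) {
                    e.printStackTrace();
                }
            });
            server.start();
            int intact = 0;
            Random random = new Random();
            byte[] sendMsg = new byte[MSG_LENGTH];
            try (Socket client = new Socket(loopback, serverSocket.getLocalPort())) {
                for (int i = 0; i < nMessages; i++) {
                    random.nextBytes(sendMsg);
                    client.getOutputStream().write(sendMsg);
                    byte[] recvMsg = client.getInputStream().readNBytes(MSG_LENGTH);
                    if (Arrays.equals(sendMsg, recvMsg)) {
                        intact++;
                    }
                }
            }
            server.join(); // the server thread exits once it sees end of stream
            return intact;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrips(3)); // prints 3
    }
}
```

The only protocol change from the one-shot version is that the server loops until it sees end of stream, so the TCP handshake and teardown are paid once per client rather than once per message.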

In our next project, we'll build on this server/client system to create a Simple In-Memory Key/Value Store.